Introduction

For the past few weeks, I’ve been wondering how memory works, what virtual memory means, what a memory management unit (MMU) is, what the difference between static and dynamic memory allocation is, and how malloc and free work.

That means we have a lot to talk about, so without further ado, let’s get started.

Computer Memory

Computer memory is any physical device capable of storing information temporarily, like RAM (random access memory), or permanently, like ROM (read-only memory). Memory devices utilize integrated circuits and are used by operating systems, software, and hardware.

Each device in a computer operates at a different speed, and computer memory gives your computer a place to access data quickly. If the CPU had to wait for a secondary storage device, like a hard disk drive, every time it needed data, the computer would be much slower.

Memory can be either volatile or non-volatile. Volatile memory loses its contents when the computer or hardware device loses power. Computer RAM is an example of volatile memory. That is why, if your computer freezes or reboots while you are working on a program, you lose anything that wasn't saved. Non-volatile memory, sometimes abbreviated as NVM, keeps its contents even if the power is lost. EPROM is an example of non-volatile memory.

As mentioned above, because RAM is volatile memory, anything stored in RAM is lost when the computer loses power. For example, while you are working on a document, it is stored in RAM. If the data was not previously saved to non-volatile storage (e.g., the hard drive), it would be lost when the computer loses power.

Memory comes into use whenever a program runs. When a program, such as your Internet browser, is opened, it is loaded from your hard drive and placed into RAM. This allows the program to communicate with the processor at higher speeds. Anything you save to your computer, such as pictures or videos, is sent to your hard drive for storage.

Virtual Memory

Computers have a finite amount of random access memory or RAM, so memory can run out, especially when running multiple programs at the same time. Virtual memory makes it possible to compensate for a computer's physical memory shortages by temporarily transferring data from RAM to disk storage. With virtual memory, a system can load larger programs, or multiple programs running at the same time, operating as if it has infinite memory. Virtual memory can be handled through either paging or segmenting.

Paging divides memory into fixed-size sections called pages. When a computer uses up its RAM, pages that aren't in use are transferred to the section of the hard drive designated for virtual memory, known as a swap file or paging file.

Segmentation divides virtual memory into segments of different lengths. Segments not in use in memory can be moved to virtual memory space on the hard drive. Some virtual memory systems combine both segmentation and paging. The primary benefit is that memory is used more efficiently.

With virtual memory, computers can run programs larger than physical memory without the added cost of extra hardware. It also frees applications from managing shared memory themselves, which can sometimes lead to accidental overwrites or the sharing of sensitive information. However, virtual memory can slow a computer down, because addresses have to be mapped between virtual and physical memory and data sometimes has to be fetched from disk. So, it's generally better to have as much physical memory as possible.

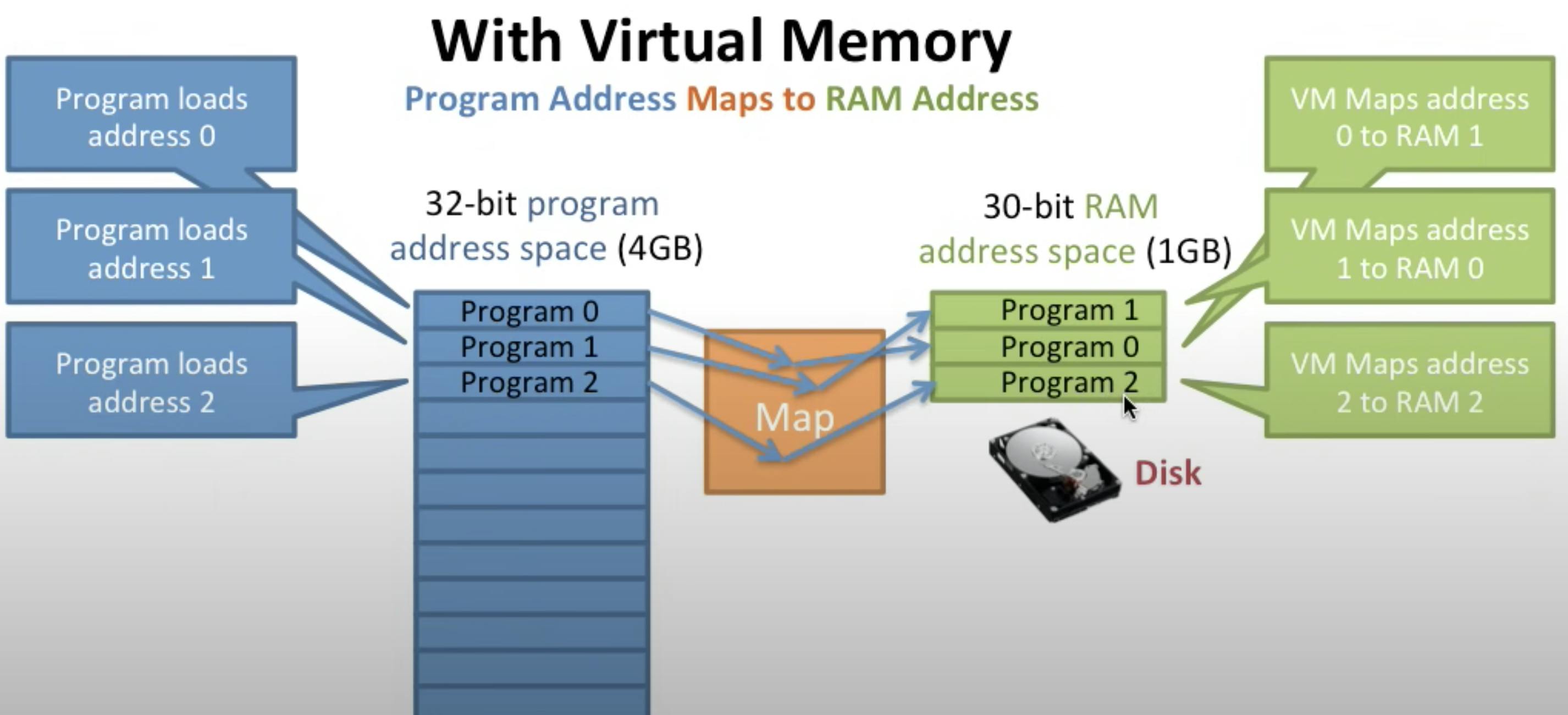

Virtual memory is also all about indirection, so let’s take the following example to understand it better. TL;DR: We’re going to map some of the program's address space to disk when it doesn’t fit in RAM, and when we need it, we’ll bring it back into memory.

With virtual memory, each program address is mapped to a RAM address. Say the program tries to load address 0. The Map looks it up, finds a free slot, and says: OK, address 0 lives over here, you can access it. In our example, the Map sends the program’s address 0 to RAM address 1.

Next, the program wants to access address 1. Since there is still free space in RAM, the Map maps program address 1 to RAM address 0 with no problems. The same happens with program address 2.

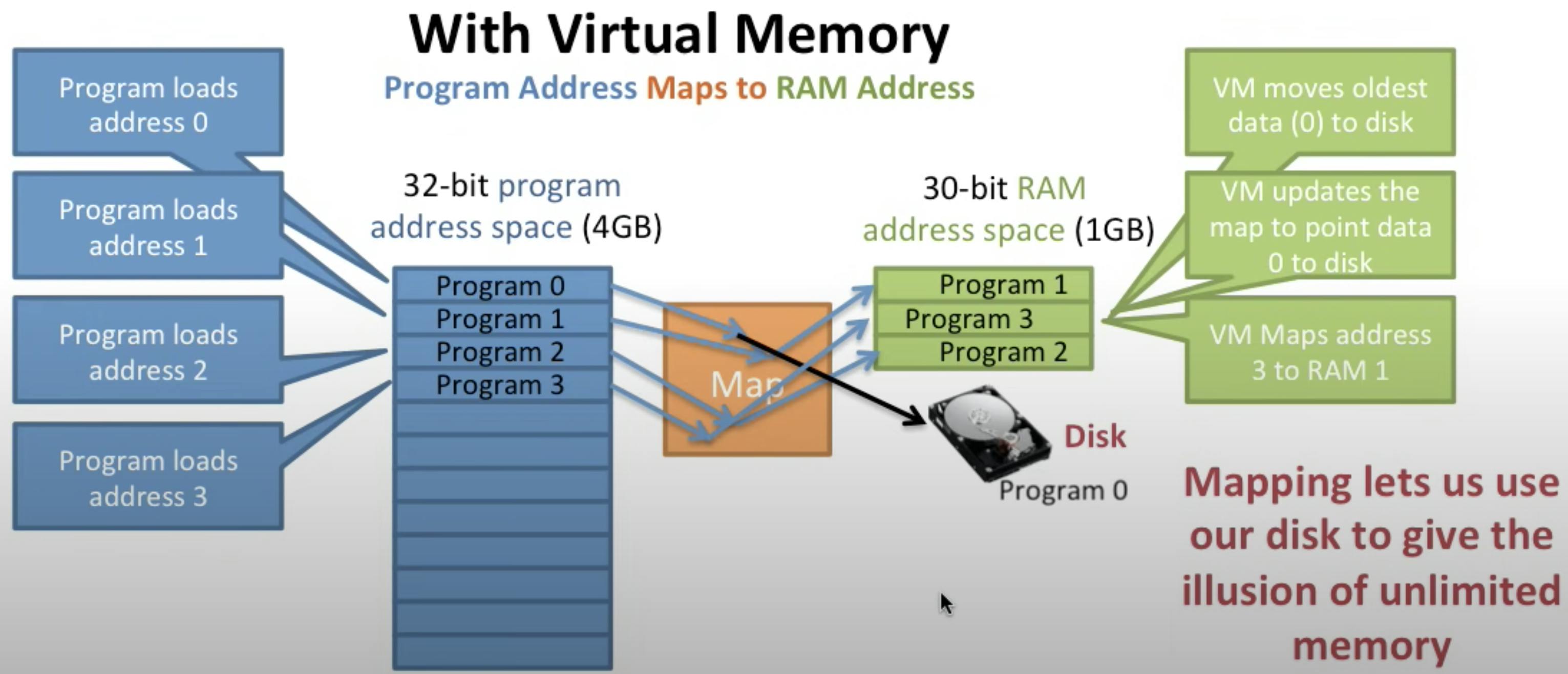

Now the physical memory is full. What will happen if we try to access a new address, for example, address 3?

Here's where it gets fun. Since the physical memory is full, the Map tells us that there is no more space in RAM. However, it can move data around, so it is going to put some of it on the disk.

That means virtual memory finds the oldest piece of data in memory, which in this case belongs to program address 0, and moves it out to the disk.

This is called a page out, as it takes a page of memory and writes it out to disk.

Then the Map is updated so that the entry for program address 0 points to the new location on disk. Finally, now that we've freed up a slot in RAM, we can go ahead and map program address 3 to it. By having this mapping, we can use our disk to give us the illusion of unlimited memory, and that’s virtual memory in short.
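To make the walkthrough more concrete, here is a minimal sketch of the Map as a tiny simulation. It assumes three RAM slots and evicts the oldest page first (FIFO), as in the example. The names `vm_t`, `vm_init`, and `vm_access` are made up for illustration, and unlike the figure, this sketch simply hands out RAM slots in order 0, 1, 2. A real MMU and operating system are far more involved.

```c
#include <assert.h>

#define NUM_FRAMES 3      /* RAM slots, as in the example above */
#define NUM_PAGES  8      /* size of the program's address space (assumed) */
#define ON_DISK   (-1)    /* marker: the page was paged out to disk */
#define UNUSED    (-2)    /* marker: the page has never been touched */

typedef struct {
    int frame_of[NUM_PAGES];   /* the "Map": page -> RAM slot, ON_DISK, or UNUSED */
    int page_in[NUM_FRAMES];   /* which page each RAM slot currently holds */
    int used;                  /* RAM slots filled so far */
    int oldest;                /* RAM slot to evict next (FIFO order) */
    int page_outs;             /* number of pages written out to disk */
} vm_t;

void vm_init(vm_t *vm) {
    for (int p = 0; p < NUM_PAGES; p++) vm->frame_of[p] = UNUSED;
    for (int f = 0; f < NUM_FRAMES; f++) vm->page_in[f] = UNUSED;
    vm->used = vm->oldest = vm->page_outs = 0;
}

/* Returns the RAM slot holding `page`, paging out the oldest page if RAM is full. */
int vm_access(vm_t *vm, int page) {
    assert(page >= 0 && page < NUM_PAGES);
    if (vm->frame_of[page] >= 0) return vm->frame_of[page];   /* already in RAM */

    int frame;
    if (vm->used < NUM_FRAMES) {
        frame = vm->used++;                            /* a free slot is available */
    } else {
        frame = vm->oldest;                            /* RAM is full */
        vm->frame_of[vm->page_in[frame]] = ON_DISK;    /* page out the oldest page */
        vm->page_outs++;
        vm->oldest = (vm->oldest + 1) % NUM_FRAMES;
    }
    vm->page_in[frame] = page;    /* update the Map in both directions */
    vm->frame_of[page] = frame;
    return frame;
}
```

Accessing pages 0, 1, and 2 fills the three slots. Accessing page 3 then pages out page 0 and reuses its slot, and touching page 0 again forces another page out.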

Question: Regarding virtual memory performance, what's going to happen to the program performance when the data it needs is on the disk?

Memory Management Unit (MMU)

A memory management unit (MMU) is a computer hardware component that handles the memory and caching operations associated with the processor. Its core job is translating the virtual addresses a program uses into physical addresses in RAM, which is the role the Map played in the previous section. It is usually integrated into the processor, although in some systems it occupies a separate IC (integrated circuit) chip.

Memory management as a whole, which the MMU supports, can be divided into three major categories:

- Hardware memory management, which oversees and regulates the processor’s use of RAM (random access memory) and cache memory.

- OS (operating system) memory management, which ensures the availability of adequate memory resources for the objects and data structures of each running program at all times.

- Application memory management, which allocates each program’s required memory and then recycles freed-up memory space when the operation concludes.

Static and Dynamic Memory Allocation

Memory allocation is the process by which computer programs and services are assigned physical or virtual memory space. The allocation is done either before or during program execution. That means there are two types of memory allocation:

Compile-time or Static Memory Allocation: Memory is reserved for declared variables by the compiler, because the size of each variable is already known at compile time.

Run-time or Dynamic Memory Allocation: Memory is allocated while the program runs by calling functions like calloc() and malloc(). These functions return the address of the new memory, which we assign to a pointer variable. We use dynamic allocation when the sizes of our variables are not constant and might change (i.e., grow or shrink) during the execution of the program.

It’s important to note where each kind of allocation lives in memory:

- Memory whose size is known at compile time needs no run-time allocator. Global and static variables live in the program's data segment, and local variables live on the stack.

- Dynamic memory allocation with malloc() and calloc(), on the other hand, is done on the heap. The stack also grows and shrinks at run time. An example is recursion, where function calls are pushed onto the call stack in order of their occurrence and popped off one by one once the base case is reached.
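As a small illustration of the difference, the sketch below puts a static variable and a heap allocation side by side. The function names `make_squares` and `grow` are made up for this example, and `realloc()` is used as one common way to grow a block, even though it isn't covered in this post.

```c
#include <assert.h>
#include <stdlib.h>

static int call_count;   /* static storage: size known at compile time, zero-initialized */

/* Builds an array whose length is only known at run time. */
int *make_squares(size_t n) {
    call_count++;
    int *squares = malloc(n * sizeof *squares);   /* dynamic: lives on the heap */
    if (squares == NULL) return NULL;             /* allocation can fail */
    for (size_t i = 0; i < n; i++) squares[i] = (int)(i * i);
    return squares;                               /* the caller must free() it */
}

/* Resizes a heap array. On failure it returns NULL and the old block stays valid. */
int *grow(int *array, size_t new_n) {
    return realloc(array, new_n * sizeof *array);
}
```

The array returned by `make_squares(5)` keeps existing after the function returns, which a local array on the stack would not, and it stays around until we pass it to `free()`.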

Allocating Dynamic Memory & malloc()

How Dynamic Allocation Works

The memory allocated to each process is composed of multiple segments, as can be seen in the following diagram:

Each process has its own address space. The stack sits at the top, in the higher addresses, while the program code, global variables, and the heap sit down at the bottom.

As we can see there is a lot of space in between the stack and heap address space. On a 64-bit machine, you can address more than 17 billion gigabytes of memory which, of course, is more memory than you have.

The top end of the heap is called the program break and we can move the program break in C by using sbrk() and brk() syscalls.

First, there is void *sbrk(intptr_t increment);, which moves the program break by increment bytes and returns the previous program break, that is, the start of the newly allocated memory.

Then there is int brk(void *addr);, which moves the program break to the address pointed to by addr.

To allocate some memory we can do something like the following:

void* memory = sbrk(4096);

What happens is that sbrk moves the break by the requested amount, in this case 4096 bytes, and returns the address where the break used to be. That old address is the start of our new memory.

Notice that we moved the program break by 4 KB. We could have moved it by a smaller amount, but the effect on physical memory would be the same: the kernel hands out memory in whole pages, so the request is effectively rounded up to the next page boundary. That’s because modern virtual memory systems use paging, and the page size is typically 4 KB.
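Here is a minimal sketch that wraps these calls, assuming a Linux system with glibc. The helper names `grow_break`, `current_break`, and `page_size` are made up for illustration. Keep in mind that the C library's own malloc may also move the break, so it's best not to call anything that allocates between two break queries.

```c
#define _DEFAULT_SOURCE   /* exposes sbrk() in glibc's <unistd.h> */
#include <assert.h>
#include <stdint.h>
#include <unistd.h>

/* Current program break, queried without moving it. */
void *current_break(void) {
    return sbrk(0);
}

/* Moves the program break up by `bytes`. Returns the start of the new
   region (the previous break), or NULL if the kernel refused. */
void *grow_break(intptr_t bytes) {
    assert(bytes > 0);
    void *old_break = sbrk(bytes);
    if (old_break == (void *)-1) return NULL;
    return old_break;
}

/* Page size reported by the system, often 4096 bytes. */
long page_size(void) {
    return sysconf(_SC_PAGESIZE);
}
```

Calling `grow_break(4096)` returns the address that `current_break()` reported just before, and afterwards `current_break()` is exactly 4096 bytes higher.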

mmap()

mmap() is similar in that it requests memory from the kernel. It says, "I need more usable memory in my address space." But rather than just letting you bump the program break up or down, it gives you more options: where the new memory block should be placed, which page size to use (on systems that support it), whether the memory is read-only or readable and writable, and whether the memory is shared or private to a particular process.
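As a rough sketch of what such a request looks like, the helper below asks for private, read-write memory that isn't backed by a file. It assumes Linux or another system that supports `MAP_ANONYMOUS`, and the names `map_private` and `unmap` are made up for this example.

```c
#define _DEFAULT_SOURCE   /* exposes MAP_ANONYMOUS in glibc */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

/* Asks the kernel for `len` bytes of zero-filled, private, read-write memory. */
void *map_private(size_t len) {
    assert(len > 0);                              /* mmap rejects a length of 0 */
    void *p = mmap(NULL,                          /* let the kernel pick the address */
                   len,
                   PROT_READ | PROT_WRITE,        /* readable and writable */
                   MAP_PRIVATE | MAP_ANONYMOUS,   /* not shared, not backed by a file */
                   -1, 0);                        /* no file descriptor, no offset */
    return p == MAP_FAILED ? NULL : p;
}

/* Gives the mapping back to the kernel. Returns 0 on success, -1 on error. */
int unmap(void *p, size_t len) {
    return munmap(p, len);
}
```

Swapping `MAP_PRIVATE` for `MAP_SHARED`, or dropping `PROT_WRITE`, is how we would pick the shared or read-only options mentioned above.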

How Memory Allocators Work. For example: malloc & free

If we decided to write our own malloc, we can maybe think of it as a doubly-linked list. We will keep a doubly-linked list of free memory blocks and every time malloc gets called, we traverse the linked list looking for a block with at least the size requested by the user. We can obtain the size of the memory block by its block metadata.

In this implementation there are three possibilities:

- If a block with the exact requested size exists, we remove it from the free list and return its address to the user.

- If the block is larger, we split it into two blocks, return the one with the requested size to the user, and add the newly created block to the list.

- If we are unable to find a block on the list, we must “ask” the OS for more memory by using the sbrk syscall.

  - After the call to sbrk, we create a new block with the allocated size.

  - Since we may have allocated much more memory than the user requested, we split this new block and return the one with the exact same size as requested.

The free function, on the other hand, is given a pointer that malloc previously returned to the user. It finds the block's metadata and adds the block to our free list again. After that, we can do some cleanup: whenever two contiguous free blocks are found, we merge them into one, which reduces the total overhead of the metadata we have to keep.

In the article "Implementing malloc and free", the author goes into more detail on the implementation of malloc and free and even provides some code.
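Independently of that article's code, the steps above can be sketched in a few dozen lines. This is only a toy under several assumptions: a Linux system where sbrk is available, a single thread, no overflow checks, and a free list kept sorted by address so that neighbours are easy to merge. The names `my_malloc`, `my_free`, `block_t`, `ALIGN`, and `CHUNK` are made up for illustration.

```c
#define _DEFAULT_SOURCE   /* exposes sbrk() in glibc's <unistd.h> */
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <unistd.h>

#define ALIGN 16     /* every returned pointer is a multiple of this */
#define CHUNK 4096   /* ask the OS for at least this many bytes at a time */

typedef struct block {
    size_t size;                 /* usable bytes that follow this header */
    struct block *prev, *next;   /* links in the free list */
    size_t unused;               /* pads the header to a multiple of ALIGN */
} block_t;
_Static_assert(sizeof(block_t) % ALIGN == 0, "header must keep alignment");

static block_t *free_list;       /* head of the free list, sorted by address */

static void list_remove(block_t *b) {
    if (b->prev) b->prev->next = b->next; else free_list = b->next;
    if (b->next) b->next->prev = b->prev;
}

/* Inserts b in address order, then merges it with adjacent free blocks. */
static void list_insert(block_t *b) {
    block_t *prev = NULL, *cur = free_list;
    while (cur && cur < b) { prev = cur; cur = cur->next; }
    b->prev = prev;
    b->next = cur;
    if (prev) prev->next = b; else free_list = b;
    if (cur) cur->prev = b;
    if (cur && (char *)(b + 1) + b->size == (char *)cur) {       /* merge with next */
        b->size += sizeof(block_t) + cur->size;
        list_remove(cur);
    }
    if (prev && (char *)(prev + 1) + prev->size == (char *)b) {  /* merge with previous */
        prev->size += sizeof(block_t) + b->size;
        list_remove(b);
    }
}

/* Shrinks b to `size` bytes and puts the remainder, if big enough, on the free list. */
static void split(block_t *b, size_t size) {
    if (b->size < size + sizeof(block_t) + ALIGN) return;   /* remainder too small */
    block_t *rest = (block_t *)((char *)(b + 1) + size);
    rest->size = b->size - size - sizeof(block_t);
    b->size = size;
    list_insert(rest);
}

void *my_malloc(size_t size) {
    if (size == 0) return NULL;
    size = (size + ALIGN - 1) & ~(size_t)(ALIGN - 1);       /* round up to ALIGN */
    block_t *b = free_list;
    while (b && b->size < size) b = b->next;                /* first block that fits */
    if (b) {
        list_remove(b);
    } else {                                                /* nothing fits: ask the OS */
        size_t total = sizeof(block_t) + size;
        if (total < CHUNK) total = CHUNK;
        size_t pad = (ALIGN - (uintptr_t)sbrk(0) % ALIGN) % ALIGN;
        char *p = sbrk((intptr_t)(total + pad));
        if (p == (char *)-1) return NULL;
        b = (block_t *)(p + pad);
        b->size = total - sizeof(block_t);
    }
    split(b, size);
    assert((uintptr_t)(b + 1) % ALIGN == 0);
    return b + 1;                                           /* memory right after the header */
}

void my_free(void *ptr) {
    if (ptr) list_insert((block_t *)ptr - 1);               /* step back to the header */
}
```

The first call to `my_malloc` finds an empty free list, so it grows the heap by one chunk and splits it. Later calls reuse and split free blocks, and `my_free` merges neighbours so that large requests can be served again.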

Conclusion

In this post, we talked a little bit about how memory works, and I think we should now have a better understanding of what dynamic allocation is and how it works.

I recommend taking a look at the resources section and going through the links. I’m sure you’ll learn a lot.

Resources

- Memory

- What is Virtual Memory? What Does it Do?

- Virtual Memory: 3 What is Virtual Memory?

- Virtual Memory: 4 How Does Virtual Memory Work?

- What is a Memory Management Unit (MMU)? - Definition from Techopedia

- What is Dynamic Memory Allocation? - GeeksforGeeks

- Difference between Static and Dynamic Memory Allocation in C - GeeksforGeeks

- Implementing malloc and free

- How processes get more memory. (mmap, brk)